Limited-access spaces in aircraft manufacturing present several issues for the mechanic. First, the confined nature of the aircraft makes it difficult to complete manufacturing tasks in a timely manner; second, working in limited-access spaces can have adverse health effects on the mechanic. Full automation, however, is impractical because of the complex geometry and variability of the workpiece. A robotic proxy is therefore needed: a robot whose actions a mechanic can remotely control or monitor, with dynamically varying levels of automation. The goal of the Boeing Advanced Research Center (BARC) is to give factory mechanics both increased efficiency and more meaningful work through robotic “mechanic assistive” devices. To this end, the BARC addresses the design and prototyping of robots for factory integration, as well as the algorithmic and software issues involved in implementing such robots. Confined space robotics spans multiple disciplines, and students from several engineering programs at the University of Washington are critical to implementing these robots successfully.

Projects

- Design and Prototyping

- Dynamics, Contact and Control

- Estimation and Information Fusion

- Vision and Augmented Reality

- Human-Robot Interaction

Design and Prototyping

Respective Student(s): Tony Piaskowy, Parker Owan, Michael Burge, Alexi Erlich

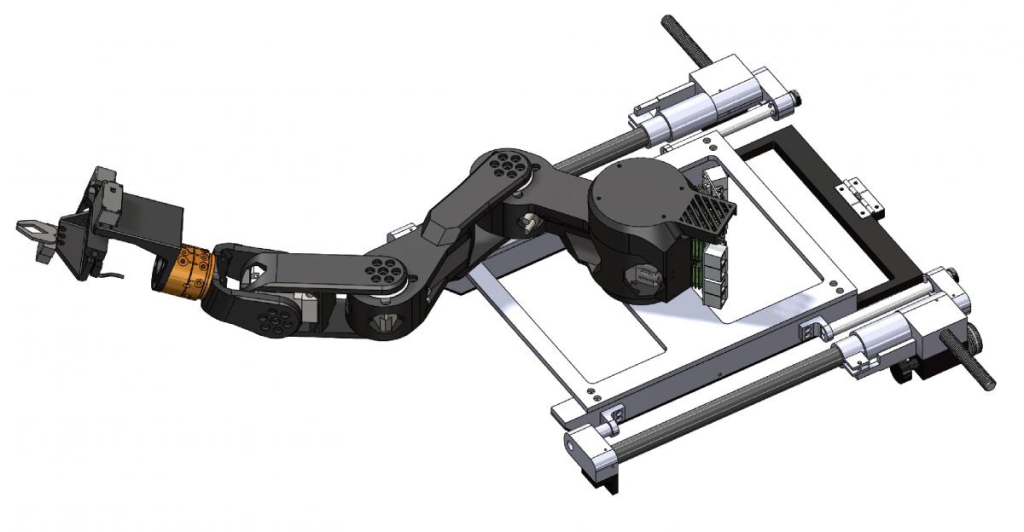

Because confined space aircraft manufacturing tasks are so physically restrictive, commercial off-the-shelf (COTS) robots often do not meet the physical requirements of many spaces and tasks. Some of the work at the BARC therefore focuses on designing and prototyping custom kinematic robots. One such robot, intended for inspection and foreign object debris (FOD) detection, was designed and prototyped using 3D-printed links and off-the-shelf actuators and drives.

Solid model design of the 4-DOF inspection SCARA robot and baseplate

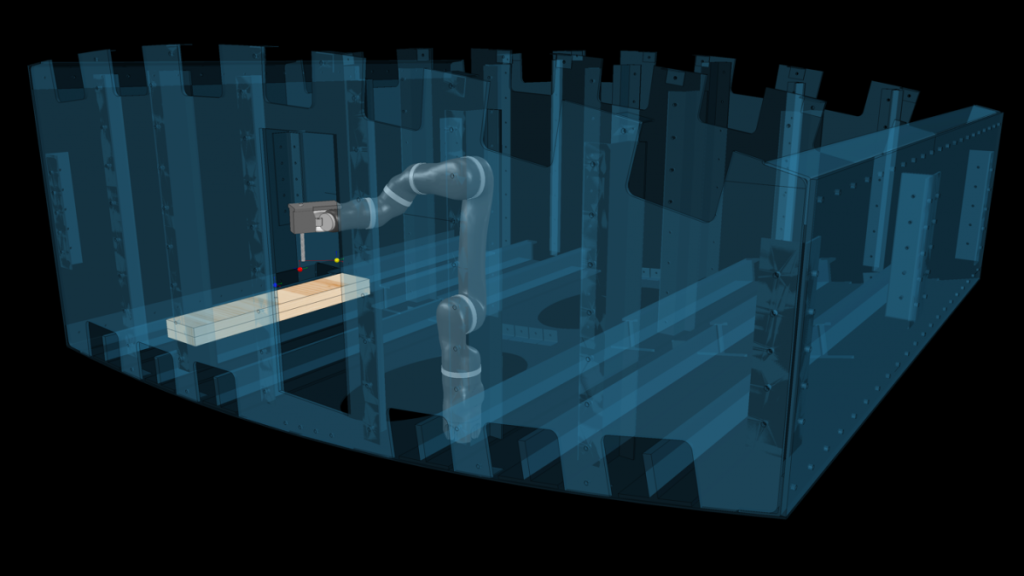

4-DOF SCARA robot deployed in the engineering wing at the BARC lab

Dynamics, Contact and Control

Respective Student(s): Tony Piaskowy, Parker Owan, Michael Burge, Alexi Erlich

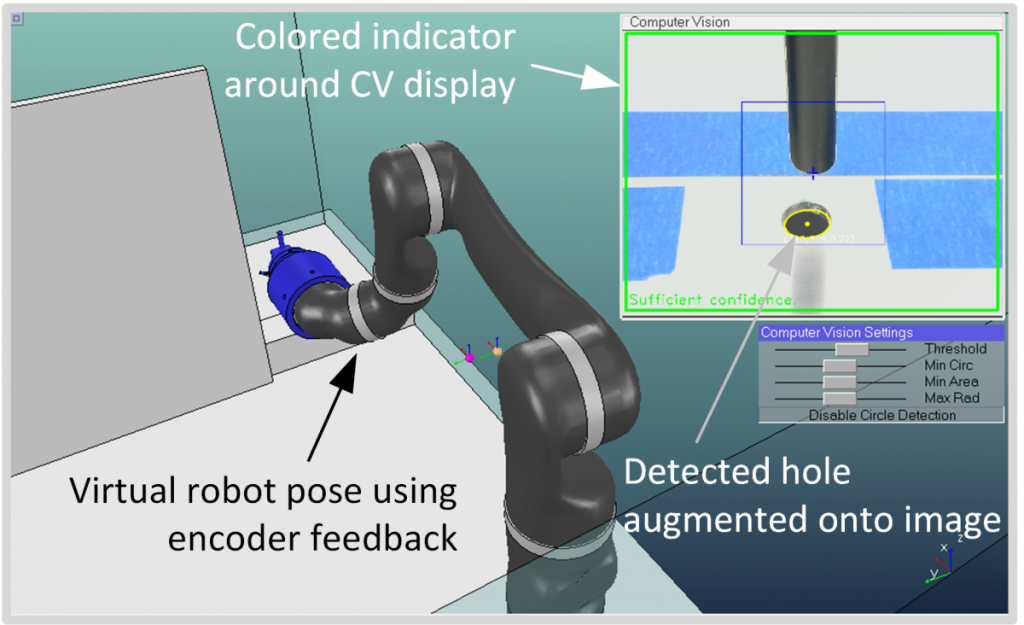

Robots in confined spaces require special attention to motion control, inverse kinematics (IK), trajectory planning, and task planning to avoid collisions with the surrounding workpiece. Current work at the BARC seeks to use existing models of the work structure for collision-free trajectory planning, and to use active compliance to minimize the forces exerted on the workpiece. The BARC's approach is to build high-fidelity dynamic simulations in parallel with hardware design and prototyping, so that real-time control software can be integrated with the hardware quickly and effectively. As robot complexity increases, it becomes important for the robot software to reason about and adapt to its environment and to relieve the operator of the mundane aspects of the job, so that the operator can focus on performing the task successfully and on time.

A simulation environment is used to perform computationally intensive offline simulations of robot dynamics in the confined environment. The same environment can then be used to efficiently visualize the robot's motion in the confined space in real time.
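The inverse kinematics mentioned above can be illustrated for a SCARA arm like the inspection robot in the Design and Prototyping section. The sketch below is a textbook closed-form solution under assumed geometry: two horizontal revolute links of lengths `l1` and `l2`, a vertical prismatic joint whose extension is taken directly as the target height, and a wrist roll. The function names `scara_ik` and `scara_fk`, the `elbow_sign` flag, and the link lengths used in testing are hypothetical and are not taken from the BARC robot.

```python
import math


def scara_ik(x, y, z, phi, l1, l2, elbow_sign=1.0):
    """Closed-form IK for an idealized 4-DOF SCARA (hypothetical geometry).

    Returns (theta1, theta2, d3, theta4): shoulder and elbow angles in radians,
    the prismatic extension d3 (assumed equal to the target height z), and the
    wrist angle theta4 chosen so that theta1 + theta2 + theta4 == phi.
    elbow_sign (+1 or -1) selects one of the two arm configurations.
    """
    r2 = x * x + y * y
    # Law of cosines gives the cosine of the elbow angle.
    c2 = (r2 - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if c2 < -1.0 or c2 > 1.0:
        raise ValueError("target is outside the reachable workspace")
    s2 = elbow_sign * math.sqrt(1.0 - c2 * c2)
    theta2 = math.atan2(s2, c2)
    # Shoulder angle: direction to the target minus the offset caused by link 2.
    theta1 = math.atan2(y, x) - math.atan2(l2 * s2, l1 + l2 * c2)
    theta4 = phi - theta1 - theta2
    d3 = z
    return theta1, theta2, d3, theta4


def scara_fk(theta1, theta2, d3, theta4, l1, l2):
    """Forward kinematics for the same idealized SCARA, used to check the IK."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y, d3, theta1 + theta2 + theta4
```

Each reachable point generally has two solutions (the two arm configurations). In a confined space, a planner would pick whichever configuration keeps the links clear of the surrounding structure, which is one reason collision-aware planning builds on top of IK rather than replacing it.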

Estimation and Information Fusion

Respective Student(s): Parker Owan

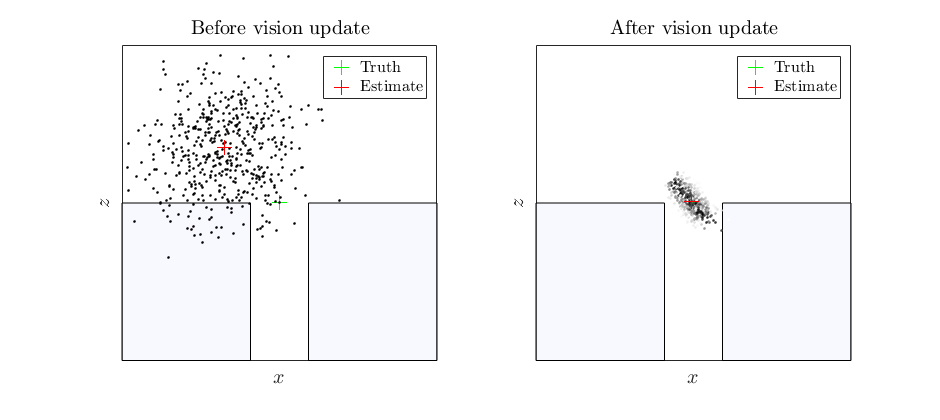

Partially uncertain environments and sensor limitations drive the need for state estimation and information fusion to inform reliable decisions and reactive control. Current work investigates stochastic state estimation to localize the robot in the confined space.

Visualization of a particle filter being used to estimate a target location before and after a computer vision feature measurement occurs.

Visualization of the BARC lab using simultaneous localization and mapping (SLAM) on a mobile robot
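The stochastic state estimation described above can be illustrated with a minimal particle filter, the technique named in the first caption. The sketch below assumes a stationary target in the plane, a random-walk motion model, and a vision measurement that reports the target's (x, y) position with Gaussian noise. The function names, noise levels, and unit-square workspace are illustrative assumptions, not the BARC implementation.

```python
import math
import random


def systematic_resample(particles, weights, rng):
    """Systematic resampling: one random offset, n evenly spaced pointers.

    Assumes the weights are already normalized to sum to 1.
    """
    n = len(particles)
    u = rng.random()
    cumulative, total = [], 0.0
    for w in weights:
        total += w
        cumulative.append(total)
    cumulative[-1] = 1.0  # guard against floating-point round-off
    resampled, j = [], 0
    for i in range(n):
        pointer = (u + i) / n
        while cumulative[j] <= pointer:
            j += 1
        resampled.append(particles[j])
    return resampled


def pf_step(particles, z, rng, process_std=0.01, meas_std=0.05):
    """One predict/update/resample cycle for a 2-D target position."""
    # Predict: assumed random-walk motion model.
    moved = [(x + rng.gauss(0.0, process_std), y + rng.gauss(0.0, process_std))
             for x, y in particles]
    # Update: Gaussian likelihood of the measured (x, y) for each particle.
    weights = [math.exp(-((x - z[0]) ** 2 + (y - z[1]) ** 2) / (2.0 * meas_std ** 2))
               for x, y in moved]
    total = sum(weights)
    if total == 0.0:
        # Every particle is implausible; fall back to uniform weights.
        weights = [1.0 / len(moved)] * len(moved)
    else:
        weights = [w / total for w in weights]
    return systematic_resample(moved, weights, rng)


def estimate(particles):
    """Point estimate: the mean of the particle cloud."""
    n = len(particles)
    return (sum(p[0] for p in particles) / n, sum(p[1] for p in particles) / n)
```

Before any measurement the particles are spread over the whole workspace; after a few camera feature measurements they collapse around the target, which is the before-and-after behaviour the caption describes. Systematic resampling is used here because it is simple and keeps resampling variance low, but other resampling schemes would also work.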

Vision and Augmented Reality

Respective Student(s): Rose Hendrix

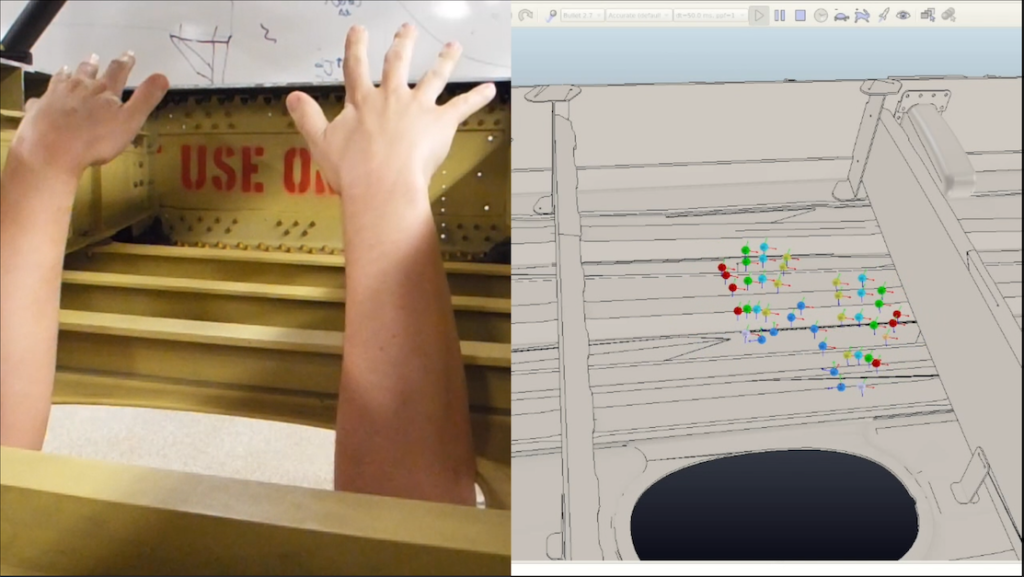

Hand and object tracking using small webcams is being developed for use in known confined spaces. The resulting dynamic model of the environment will be tested for its effectiveness as an aid to mechanics working in confined, occluded spaces; small screens attached to the cameras will also be tested as a simplified version of the same aid. Hand tracking will also be used to test collision avoidance in a space shared by autonomous robots and human mechanics. Augmented reality glasses (Osterhout Design Group R-7, which can be used with safety and/or prescription lenses) can currently be used for remote visualization with a C1D1 portable camera communicating wirelessly.

Operator hands inside a wing bay (left) and camera based hand tracking (right).

Human-Robot Interaction

Respective Student(s): Tony Piaskowy, Rose Hendrix, Parker Owan

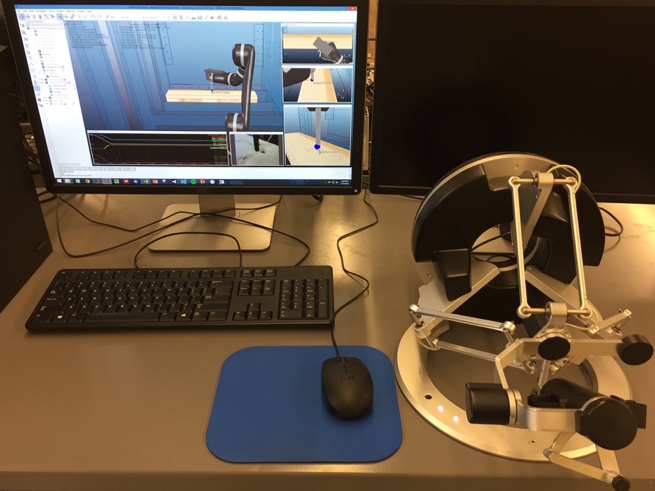

Collaborative robots, or co-robots, represent an approach in which the robot and the mechanic work together to solve a problem. For robots to be accepted in practice, their seamless integration into aerospace manufacturing requires intuitive interfaces and performance that improves through collaboration. This collaboration can take the form of physical interaction (as in impedance control) or of remote interaction through haptic force-reflection devices or other interfaces. Current work at the BARC seeks to answer when and how control authority should transition between the mechanic and the robot. Further questions include what communication the robot needs to convey its intent effectively and, in mixed-initiative interaction (i.e., cases where either the robot or the human can request or relinquish control), what should happen when the agents disagree.

Remote workstation for operator including haptic device and visualization

Visualization and operator interface for a remotely operated robot, showing computer vision and feature detection.
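Impedance control, named above as one way to realize physical interaction, makes the robot respond to a push like a spring-damper about a desired position. The one-dimensional sketch below assumes an ideal point mass driven directly by the commanded force, with no robot dynamics, friction, or sensor noise. The function names and the mass, stiffness, and damping values are illustrative assumptions, chosen so that the closed loop is critically damped.

```python
def impedance_force(x, v, x_des, v_des, stiffness, damping):
    """Commanded force of a 1-DOF impedance law: F = K (x_d - x) + D (v_d - v)."""
    return stiffness * (x_des - x) + damping * (v_des - v)


def simulate(f_ext, x_des=0.0, mass=2.0, stiffness=200.0, damping=40.0,
             dt=0.001, steps=5000):
    """Simulate a point mass under the impedance law plus a constant external
    force f_ext (e.g. a mechanic pushing on the end effector).

    Returns the final (position, velocity) after steps * dt seconds.
    """
    x, v = 0.0, 0.0
    for _ in range(steps):
        f = impedance_force(x, v, x_des, 0.0, stiffness, damping)
        a = (f + f_ext) / mass
        # Semi-implicit Euler: update velocity first, then position.
        v += a * dt
        x += v * dt
    return x, v
```

At steady state the velocity is zero and the commanded force balances the push, so the end effector settles at `x_des + f_ext / stiffness`; a 10 N push against a 200 N/m stiffness yields a 0.05 m deflection. Lowering the stiffness makes the robot easier for the mechanic to guide by hand, while raising it makes the robot hold its position more firmly against contact with the workpiece.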